IBM’s Enterprise Transformation — Finance Technology

- By Josephine Schweiloch, Director of Data Science & Technology, IBM Finance

- Published: 8/20/2024

Finance teams play a crucial role in providing insights, improving efficiency and uncovering new opportunities to drive business growth. At IBM, our finance teams are on a multi-year journey to simplify, eliminate and automate repetitive tasks so that we can focus on high-value and strategic work. As the Director of Data Science and Technology in IBM Finance, I lead the organization responsible for driving this transformation. We are technologists who also have professional experience in core finance functions, and we use technologies including generative AI, data science and automation.

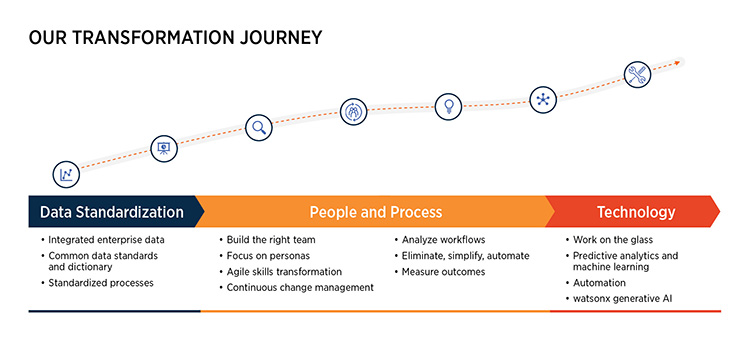

Throughout IBM’s transformation journey, we have continually changed the way we work. We simplify processes, adopt new tools and technology, and grow analytical and technical skills. Like most finance teams, we have more transformation ideas than we have the bandwidth to deliver. Some of our high-impact transformations include journal automation, touchless financial forecasting and intelligent pricing approvals. We use the IBM Garage Methodology to manage our project pipeline. This end-to-end model for accelerating digital transformation at scale provides us with the practices, technologies and expertise we need to rapidly turn ideas into business value. It also allows us to start small and then determine whether to scale or discontinue a transformation project.

A common thread throughout our Data Science and Technology initiatives is a focus on process and workflow transformation. We stay in concert with the business to envision and enable the ideal future state. Simply using technology to automate inefficient or inconsistent processes would be a fool’s errand that creates more problems than it solves. To avoid that, we start by examining workflows and asking these questions: What problem are we really trying to solve? Is this a business or a technology problem? How are people currently doing the work? What elements can we eliminate or simplify before we consider automation? Can a single global process replace disparate regional or functional processes?

“Touchless” (or “Low-touch”) Financial Forecasting

A good example of this is IBM’s Touchless Forecasting, a predictive tool that uses machine learning and AI to accelerate and simplify the financial forecasting process. Transformation at this level doesn’t happen overnight. In data science and AI projects, it’s common to pause, learn and course-correct. The creation of Touchless Forecasting was no different. We experienced some great beginnings, a few false starts and many pivots.

We began building Touchless Forecasting about three years ago, focusing first on a small area of the business. Through research, experimentation, testing and stakeholder feedback, we developed a fully automated forecasting pipeline that worked and could be scaled. Today, we forecast 70,000 different data points a month using a collection of data science models. Our threshold for forecast accuracy is 95%, and in many areas, we realize 98% or greater. We routinely backtest our modeled forecasts; any model returning below 95% accuracy is immediately investigated so that we can determine the root cause and take corrective action.
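To make the backtesting threshold concrete, here is a minimal sketch of how a below-threshold check could look. It is not IBM's implementation: the accuracy metric (one minus absolute percentage error), the `BacktestResult` class, the `flag_for_review` function and the series names are all assumptions made for illustration. Only the 95% threshold comes from the text above.

```python
from dataclasses import dataclass

ACCURACY_THRESHOLD = 0.95  # the 95% threshold described in the article


@dataclass
class BacktestResult:
    series_id: str   # hypothetical identifier for one forecasted data point
    forecast: float  # value the model predicted for a past period
    actual: float    # value that was actually recorded

    @property
    def accuracy(self) -> float:
        # Assumed metric: 1 - absolute percentage error, floored at 0.
        if self.actual == 0:
            return 1.0 if self.forecast == 0 else 0.0
        ape = abs(self.forecast - self.actual) / abs(self.actual)
        return max(0.0, 1.0 - ape)


def flag_for_review(results, threshold=ACCURACY_THRESHOLD):
    """Return the ids of series whose backtested accuracy is below the threshold."""
    return [r.series_id for r in results if r.accuracy < threshold]


results = [
    BacktestResult("revenue_us", forecast=98.0, actual=100.0),
    BacktestResult("revenue_emea", forecast=90.0, actual=100.0),
]
print(flag_for_review(results))
```

In this toy data, only the second series misses the threshold, so it is the one routed for root-cause investigation. A real check would need whatever accuracy definition the business and its stakeholders have agreed on.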

I often get questions about whether we are aiming for 100% accuracy in our predictive forecasts. In short, no, we are not. Every business must decide for itself, with stakeholder input, on the minimum acceptable level of accuracy for its intended use cases. I urge you to keep in mind that maniacal precision is a red herring. You don’t need perfection; you need a forecast that is lean enough to deliver productivity benefits and accurate enough to drive business insights.

Data Standardization

To understand how we built Touchless Forecasting, it is helpful to back up and understand how IBM invested in managing enterprise data. We built a robust enterprise data environment called Enterprise Performance Management (EPM) that includes not only finance data but also HR, sales and operational data; it is our single trusted source of information across the organization. The data has been democratized, meaning anyone with a business need and security rights can access it on a “single pane of glass.” This was a long, complex effort, but the benefits it yielded are priceless. Most critically, we have eliminated data debates from our management system. Now, when we start a finance discussion at IBM, everyone has pre-agreed that we have one view of factual data, and it exists in this enterprise data environment.

Critical to this effort was building one standardized enterprise taxonomy (our Enterprise Data Standards) that is consistent across the entire company. We standardized data sources, metric definitions and naming conventions across all systems. For example, no more coding “North America” with endless variations such as NA, N Amer, N-O-A-M-E-R. This allows our data to be easily connected and enables disparate systems to talk to each other. IBM’s Enterprise Data office, which partners with representatives from every business unit, governs this taxonomy and supports the business units in modifying existing or onboarding new data.
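As an illustration of what a naming standard buys you, the sketch below maps free-form region labels onto one canonical value. The alias table, the `normalize_region` function and the choice to reject unmapped labels are hypothetical. They do not reflect the actual Enterprise Data Standards, which the article does not detail.

```python
import re

# Hypothetical alias table: keys are labels reduced to uppercase letters only.
REGION_ALIASES = {
    "NA": "North America",
    "NAMER": "North America",
    "NOAMER": "North America",
    "NORTHAMERICA": "North America",
}


def normalize_region(raw: str) -> str:
    """Map a free-form region label to its canonical name."""
    # Strip spaces, hyphens and other punctuation, then uppercase.
    key = re.sub(r"[^A-Za-z]", "", raw).upper()
    try:
        return REGION_ALIASES[key]
    except KeyError:
        # Fail loudly so unmapped labels are reviewed instead of silently passed on.
        raise ValueError(f"Unmapped region label: {raw!r}") from None


for label in ["NA", "N Amer", "N-O-A-M-E-R", "north america"]:
    print(label, "->", normalize_region(label))
```

Raising an error on an unknown label is a deliberate choice in this sketch: it sends new variants to whoever governs the taxonomy, so they do not quietly create another spelling.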

People

With the data foundations in place, we used the “people, process, technology” approach to create Touchless Financial Forecasting. First, we assembled a small core technology team made up of professional data scientists and engineers. While it is critical to have the right skills and experience in your team, it is equally critical to not become paralyzed trying to build the “perfect team.” Especially in a tight labor market, it can be difficult to recruit data scientists and data engineers, and budget constraints may limit how quickly you can grow. Our recommendation is to start small and develop a long-term hiring plan that allows you to incrementally add key skills over time as you scale the technology function.

In parallel, we cultivated relationships with change agents across the business units who would be our partners in defining workflows and processes. Every organization contains at least a few “tech champions” or “early adopters” who are enthusiastic about new ways of working. In a finance organization, these tend to be self-taught Excel experts who build exceptional pivot tables and write the most complex nested formulas imaginable. (I suspect as you read that sentence, at least one colleague’s name came to mind!)

We focus on engaging and nurturing these tech champions, empowering them to contribute to the development of our tools and act as change agents and advocates across the business. Recently, most of these champions have become passionate users of generative AI. Many are now contributing back to IBM’s open-source AI model called Granite, helping to further enhance the model’s financial acumen.

Continuous skill development and education are also key. Not every person in the organization is, or should be, a data scientist, but everyone needs to understand how to use technology. Data science and AI are not magic; they are math and computer science. Stakeholders who understand this are better able to envision the current capabilities of data science and the problems it can solve for them.

IBM works hard to foster a culture of continuous skill development and learning, making sure employees know how to use our own technology and understand how the industry is evolving. In our recent all-company watsonx AI Challenge, 178,000 IBMers from 128 countries came together to put AI to work and created 11,500 new AI projects. This challenge allowed us to build deep skills across the company and drive tremendous enthusiasm for the power of generative AI.

Our Finance AI guild, open to all IBMers, is an internal network of champions who collaborate to share the latest news about AI technology and use cases. We have robust skills training and encourage people to build the skills needed to adapt to the changing AI-driven business landscape. Engagement all the way up and down the stakeholder ladder, from analyst to senior executive, is critical to ensure alignment and value realization.

Technology

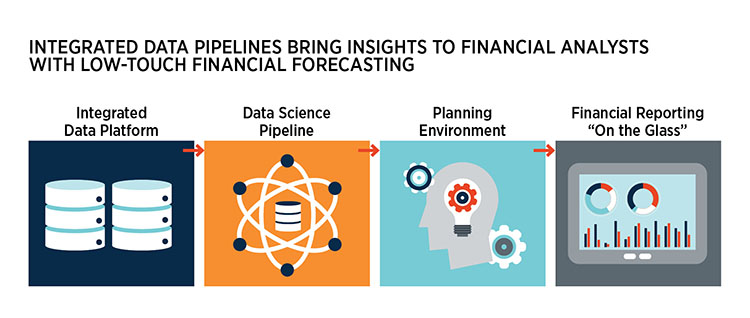

The goal of automation is typically to improve process efficiency and reliability. In the case of Touchless Forecasting, without automation, we would simply be moving work from one team (Financial Analysts) to another (Finance Technology). To avoid this, we focused on building automated end-to-end data pipelines that would perform all tasks with no human interaction.

The four main stages of the data science pipeline are data acquisition, data preparation, model execution, and data output. In the first two stages, data is automatically retrieved from trusted sources, cleaned and organized for modeling. In the third stage, various machine learning models are run, and forecast predictions are generated. In the final stage, those predictions are sent to IBM Planning Analytics, where the data is stored and made available to financial analysts. The entire pipeline is written primarily in Python code, which is containerized and deployed on IBM Cloud. A Machine Learning Operations (MLOps) layer runs across all the pipelines to ensure security, resiliency and error handling.
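The four stages can be pictured as functions chained end to end with no manual step in between. The sketch below is a toy illustration under stated assumptions: every function name is hypothetical, the "model" is a simple historical mean standing in for real machine learning models, and a Python list stands in for the planning system that receives the output. Containerization, deployment and the MLOps layer are out of scope here.

```python
from statistics import mean


def acquire():
    """Stage 1, data acquisition: stand-in for pulling data from a trusted source."""
    return {"revenue_us": [100.0, None, 104.0, 108.0]}


def prepare(raw):
    """Stage 2, data preparation: drop missing observations."""
    return {sid: [v for v in vals if v is not None] for sid, vals in raw.items()}


def run_models(clean):
    """Stage 3, model execution: placeholder model (mean of history)."""
    return {sid: mean(vals) for sid, vals in clean.items()}


def publish(forecasts, sink):
    """Stage 4, data output: write forecasts to the output store."""
    for series_id, value in sorted(forecasts.items()):
        sink.append((series_id, value))
    return sink


def run_pipeline(sink=None):
    """Run all four stages in order, with no human interaction."""
    sink = [] if sink is None else sink
    return publish(run_models(prepare(acquire())), sink)


print(run_pipeline())
```

Because each stage is a plain function with a single input and output, any one of them can be tested or swapped out without touching the others.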

There are several different data science models used in the model execution stage, depending on the nature of the data. While all models use a blend of historical and real-time data, different models have different strengths and weaknesses. Our approach allows model selection to vary based on patterns in the data, like seasonality. While it is important to keep things as simple as possible, it is equally important to select the right tool for the job.
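One way to let patterns in the data drive model choice is to test each series for seasonality and route it accordingly. The sketch below is only an assumption about how such routing could work, not a description of IBM's models. It uses lag-12 autocorrelation as the seasonality test, a 0.5 cutoff chosen arbitrarily, and two deliberately simple forecasters (seasonal naive and last value) as stand-ins for real models.

```python
from statistics import mean


def autocorrelation(series, lag):
    """Sample autocorrelation of a series at the given lag."""
    mu = mean(series)
    denom = sum((x - mu) ** 2 for x in series)
    if denom == 0:
        return 0.0  # a constant series has no seasonal signal
    num = sum((series[i] - mu) * (series[i - lag] - mu)
              for i in range(lag, len(series)))
    return num / denom


def seasonal_naive(series, period):
    """Forecast the next point as the value one full season earlier."""
    return series[-period]


def last_value(series):
    """Forecast the next point as the most recent observation."""
    return series[-1]


def choose_and_forecast(series, period=12, threshold=0.5):
    """Pick a forecaster based on seasonality; return (model name, forecast)."""
    has_enough_history = len(series) >= 2 * period
    if has_enough_history and autocorrelation(series, period) >= threshold:
        return "seasonal_naive", seasonal_naive(series, period)
    return "last_value", last_value(series)


monthly_pattern = list(range(1, 13)) * 3  # three identical 12-month cycles
print(choose_and_forecast(monthly_pattern))
```

The same routing idea extends to other patterns, such as trend or intermittency, by adding further tests and candidate models.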

We have also started to leverage IBM’s generative AI platform, watsonx, to provide analysts with more insights into the modeled forecasts. They can interact with the data using natural language prompts in a watsonx Assistant chat interface, powered by watsonx.ai and IBM’s Granite model, to gain forecast insights and explanations.

Overall, this data and forecasting transformation has led to a 40% productivity gain in our FP&A community because our financial analysts no longer spend their time gathering, cleaning and negotiating around data. As a result, they can focus their time and expertise on higher-value and more complex financial analysis.

Summary

The finance domain is ripe for data science and automation solutions to improve efficiency, productivity and reliability. While the traditional financial analyst role is still an important part of any company’s finance function, building a data science and technology capability in finance can help realize these benefits more quickly. At IBM, our finance transformation journey has been iterative and has focused on first building a trusted enterprise data platform, then adding the people, process and technology to take advantage of the vast amounts of data we have available. Every company is different, but these stages of growth and evolution may serve as useful guideposts for any finance organization interested in moving to the next level of automation.

Copyright © 2024 Association for Financial Professionals, Inc.

All rights reserved.