
Use Cases for Generative AI

- By Jesse Todd, Director, Cross-Industry Transformation, Microsoft

- Published: 8/20/2024

A Primer on How GenAI Works

A large language model (LLM) is a type of artificial intelligence that can process and produce natural-language text. It learns from a massive amount of text data, such as books, articles and web pages, and discovers the patterns and rules of language in that data. It can perform various tasks, such as answering questions, summarizing documents and writing essays. It is helpful to think of an LLM as a language calculator: it uses the patterns it has learned to calculate the most probable next word as it composes a response.
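To make the "language calculator" idea concrete, here is a deliberately toy sketch of next-word prediction. It counts which word follows which in a small, made-up text (a bigram model) and picks the most frequent follower. Real LLMs instead use neural networks trained on subword tokens with far longer context, so treat this only as an analogy for "calculating the most probable word"; the function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word immediately follows it."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_probable_next(follows: dict[str, Counter], word: str) -> tuple[str, float] | None:
    """Return the most frequent follower of `word` and its estimated probability."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # word never seen, or never followed by anything
    nxt, n = counts.most_common(1)[0]
    return nxt, n / sum(counts.values())


# Made-up training text, for illustration only.
corpus = (
    "revenue grew this quarter . revenue grew last quarter . "
    "revenue fell in march . costs grew this quarter ."
)
model = train_bigrams(corpus)
print(most_probable_next(model, "revenue"))  # ('grew', 0.6666666666666666)
```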

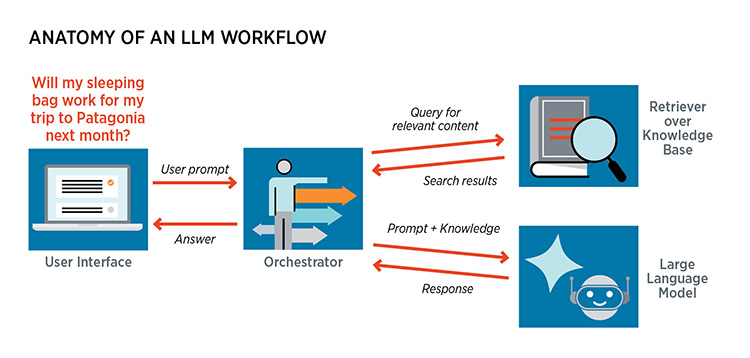

- The user interface is where you interact with the model in some way, most commonly as a prompt that asks a question to the model.

- Example: Will my sleeping bag work for my trip to Patagonia next month?

- Prompt engineering is the practice of refining how you phrase questions to an LLM so that you get the answers you need.

- The orchestrator is the brain of the application. It interprets the user's question based on perceived intent, context and goals, then converts the question into a machine-readable query to run against a knowledge source.

- Example: The orchestrator may recognize that the prompt is asking about warmth and look up weather conditions, historical weather patterns, forecasts and so on.

- The knowledge base is the specified data source. It may be as broad as the public internet or as narrow as company-proprietary data. The knowledge base returns the relevant information to the orchestrator, which then passes it to the large language model.

- The large language model is the interpreter: it looks for patterns in the information from the knowledge source and converts them into a response the user can understand.
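The flow described above, from user interface to orchestrator to knowledge base to LLM, can be sketched in a few lines of Python. Every component here is a hypothetical stand-in: keyword matching plays the orchestrator's intent detection, a dictionary plays the knowledge base, and a string template plays the LLM. Real orchestrators and models are far more sophisticated; this only shows how the pieces hand off to one another.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical knowledge base: topic -> documents. In practice this could be
# a search index, a company database or the public internet.
KNOWLEDGE_BASE: dict[str, list[str]] = {
    "weather": ["<forecast and historical lows for Patagonia next month>"],
    "warmth": ["<temperature rating of the user's sleeping bag>"],
}

# Hypothetical intent rules: keywords the orchestrator maps to topics.
INTENT_KEYWORDS: dict[str, list[str]] = {
    "weather": ["patagonia", "next month", "trip"],
    "warmth": ["sleeping bag", "warm"],
}


@dataclass
class Query:
    """Structured, machine-readable form of the user's question."""
    topics: list[str]


def orchestrate(prompt: str) -> Query:
    """Orchestrator: infer intent from the prompt and build a query."""
    text = prompt.lower()
    topics = [t for t, kws in INTENT_KEYWORDS.items() if any(k in text for k in kws)]
    return Query(topics=topics)


def retrieve(query: Query) -> list[str]:
    """Knowledge base: return every document filed under the requested topics."""
    return [doc for t in query.topics for doc in KNOWLEDGE_BASE.get(t, [])]


def llm(prompt: str, context: list[str]) -> str:
    """Stand-in for the LLM, which would turn prompt + context into fluent text."""
    if not context:
        return "I could not find information relevant to your question."
    return f"Answer to {prompt!r}, based on: " + "; ".join(context)


def answer(prompt: str) -> str:
    """User interface entry point: prompt -> orchestrator -> knowledge base -> LLM."""
    query = orchestrate(prompt)
    context = retrieve(query)
    return llm(prompt, context)


print(answer("Will my sleeping bag work for my trip to Patagonia next month?"))
```

Swapping the dictionary for a company's own data source is, in miniature, the difference between the public and commercial solutions discussed in this article.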

About a year ago, Microsoft asked its finance team how they foresaw applying generative AI in their finance roles. The result was a list of more than 200 use cases! Here's a representative sample of that list.

- Summarize information

- Recommend actions

- Generate content

- Simplify tasks

Below are examples of how LLMs could be used across three types of assistants: public, commercial and custom solutions.

Public Solutions

Hundreds of LLMs are available to the public via the internet. They are good for general use and draw on publicly available data (i.e., the internet) to respond to prompts. We often think of them as research assistants that can find an answer to almost any question you ask.

Here is one simple user story: Let's say I want to understand the economic trends affecting customer purchase behavior, and therefore my forecasts, and I want external or macroeconomic data that I could use in a regression against my internal data.

- Prompt: “What are the economic trends that most impact customer behavior?” The LLM returns a long list with some interesting ideas: Customers are shopping everywhere, all at once, because they have multiple channels available. They are using lots of new technology. Customers are more empowered because they have greater choice than ever before. The sharing economy is on the rise. This tells me a lot about trends but gives me no data.

- Modified prompt: “What are key macroeconomic indicators that most impact customer behavior?” Now, I am getting lists of data types, such as personal income, disposable versus discretionary spending and consumer confidence.

- Modified prompt: “How can I access publicly available consumer confidence data?” I learn that the Conference Board and others publish this data every month, and the LLM provides links to the data.

Now that I have the consumer confidence index (CCI) data, I ask the LLM a technical question, and my prompt is more specific: “How can I run a regression of CCI data against my own? Let's say product revenue for last year in a spreadsheet. Please provide me with an algorithm and functions that I can use.” It tells me the steps to take, how to prepare my data, how to execute a linear regression, and even how to interpret the results, such as the R-squared, the F-statistic and the coefficients. All of this information is publicly available.
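The regression the LLM walks through can be sketched without a spreadsheet. This is a minimal ordinary-least-squares example with one predictor (CCI) and one outcome (revenue), reporting the quantities mentioned above: the coefficients, R-squared and the F-statistic. The function name and the monthly figures are hypothetical, not real CCI or revenue data. In Excel, the LINEST function with its stats argument set to TRUE reports these same statistics.

```python
from __future__ import annotations

import math


def simple_linear_regression(x: list[float], y: list[float]) -> dict[str, float]:
    """Ordinary least squares fit of y = intercept + slope * x, with R-squared and F."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    fitted = [intercept + slope * xi for xi in x]
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # unexplained variation
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)                # total variation
    if ss_tot == 0:
        raise ValueError("y values must not all be identical")
    ss_reg = ss_tot - ss_res                                     # explained variation

    r_squared = ss_reg / ss_tot
    # One predictor: F = (SS_reg / 1) / (SS_res / (n - 2)).
    f_statistic = math.inf if ss_res == 0 else ss_reg / (ss_res / (n - 2))
    return {"intercept": intercept, "slope": slope,
            "r_squared": r_squared, "f_statistic": f_statistic}


# Made-up monthly figures, for illustration only.
cci = [101.3, 99.8, 104.1, 102.7, 106.0, 103.5]
revenue = [2.10, 2.02, 2.31, 2.20, 2.44, 2.28]  # e.g., $ millions
result = simple_linear_regression(cci, revenue)
print({k: round(v, 3) for k, v in result.items()})
```

An R-squared near 1 means CCI explains most of the variation in revenue; the F-statistic tests whether the slope differs from zero, and with a single predictor it equals the square of the slope's t-statistic.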

Commercial Solutions

Public tools have some capabilities, but commercial solutions can house their own orchestration engines optimized for specific tasks, keep your prompts private, connect to specific or specialized knowledge bases such as those inside your company, and return answers much better suited to completing your task. Commercial solutions move us toward different types of assistants, or copilots, that reside inside applications and accelerate productivity within those applications. The number of these tools, and the skills built into them, continues to grow.

Let’s walk through an example with a copilot in Excel. (Google Sheets offers a similar capability through Duet AI.) We start with a basic data table in Excel. On the right side of the screen is a user interface where I can prompt the copilot, and the copilot can also prompt me by suggesting actions, such as adding a column that combines campaign owner and campaign name. Maybe I don’t want that; instead, I tell it to add a column that calculates the percentage of total users who are also engaged users. It shows me the formula it proposes and asks whether I want to insert it into the table. I say yes, and there it is.
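The calculated column the copilot inserts is a simple per-row ratio. In Excel it would be a formula along the lines of `=[@[Engaged Users]]/[@[Total Users]]` formatted as a percentage, assuming a table with those (hypothetical) column names. The Python sketch below performs the same calculation on a made-up campaign table and returns None where a row has no users, rather than failing.

```python
from __future__ import annotations


def add_engaged_pct(rows: list[dict]) -> list[dict]:
    """Return copies of `rows` with an 'engaged_pct' column: engaged / total users * 100.

    Rows with zero total users get None instead of raising ZeroDivisionError,
    much as a spreadsheet formula might be wrapped in IFERROR.
    """
    out = []
    for row in rows:
        total = row["total_users"]
        pct = round(row["engaged_users"] / total * 100, 1) if total else None
        out.append({**row, "engaged_pct": pct})  # copy; the input rows are untouched
    return out


# Hypothetical campaign table; column names and figures are made up.
campaigns = [
    {"campaign": "Spring launch", "owner": "Ana", "total_users": 1200, "engaged_users": 300},
    {"campaign": "Loyalty push", "owner": "Raj", "total_users": 800, "engaged_users": 360},
    {"campaign": "Pilot", "owner": "Lee", "total_users": 0, "engaged_users": 0},
]
for row in add_engaged_pct(campaigns):
    print(row["campaign"], row["engaged_pct"])
```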

Next, I want a visual that tells the story of how the channels compare, so I prompt the copilot: “Show me a chart with total revenue by campaign type.” It shows revenue for digital marketing, customer experience and brand marketing. The copilot also explains its logic and what it has created: “It's called a custom bar chart showing the total Revenue by Campaign Type. The chart shows the total revenue for Digital was …” Then it prompts me back, noting that, given this analysis, people typically like to look for outliers.
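Behind the chart, the copilot is grouping rows by campaign type and summing revenue. Here is a minimal sketch of that aggregation, with a plain-text bar chart standing in for Excel's chart. The function names, rows and revenue figures are made up; only the three campaign types come from the example above.

```python
from __future__ import annotations

from collections import defaultdict


def revenue_by_type(rows: list[dict]) -> dict[str, float]:
    """Sum revenue per campaign type, largest first (the data behind the chart)."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["campaign_type"]] += row["revenue"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


def text_bar_chart(totals: dict[str, float], width: int = 30) -> str:
    """Render totals as a plain-text horizontal bar chart scaled to the largest value."""
    if not totals:
        return ""
    top = max(totals.values())
    lines = []
    for label, value in totals.items():
        bar = "#" * round(width * value / top) if top > 0 else ""
        lines.append(f"{label:<20} {bar} {value:,.0f}")
    return "\n".join(lines)


# Hypothetical rows; figures are made up for illustration.
rows = [
    {"campaign_type": "Digital marketing", "revenue": 120_000},
    {"campaign_type": "Customer experience", "revenue": 45_000},
    {"campaign_type": "Brand marketing", "revenue": 60_000},
    {"campaign_type": "Digital marketing", "revenue": 30_000},
]
print(text_bar_chart(revenue_by_type(rows)))
```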

Custom Solutions

The third scenario is one in which you have pushed your assistants and copilots to their limits and they simply lack the capability to support a required task, perhaps because the activity is specialized or requires data or actions across multiple applications in the organization. This may require a custom-built LLM solution in which your company controls the orchestrator (to build the required skills), the knowledge bases and the LLM itself.

Here are some examples from the FP&A community of their custom-built LLMs:

- “I’m building an open-source language model into a financial dashboard to create a possibility of asking it for daily insights.”

- “We are using it to detect anomalies in data that may indicate fraud.”

- “We are loading various public filings into an LLM and having it summarize the documents and pull out key findings.”

- “At our company, a natural language program writes the first draft of a budget variance report.”
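The fraud-detection quote describes anomaly detection. The teams quoted do not say how their systems work, so the following is only a sketch of one classical, non-LLM statistical check of the kind such a system might run alongside a model: the modified z-score of Iglewicz and Hoaglin, with its commonly cited 3.5 cutoff. The function names and payment amounts are hypothetical.

```python
from __future__ import annotations

import math
from statistics import median


def modified_z_scores(values: list[float]) -> list[float]:
    """Robust z-scores: 0.6745 * (x - median) / MAD (median absolute deviation).

    Using the median and MAD instead of the mean and standard deviation keeps a
    single huge value from hiding itself by inflating the measured spread.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # Most values equal the median, so any value that differs is extreme.
        return [0.0 if v == med else math.copysign(math.inf, v - med) for v in values]
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indexes of values whose |modified z-score| exceeds the threshold."""
    return [i for i, z in enumerate(modified_z_scores(values)) if abs(z) > threshold]


# Made-up daily payment amounts; index 6 is an obvious outlier.
payments = [120, 95, 110, 105, 98, 102, 5000, 101]
print(flag_anomalies(payments))  # [6]
```

A flagged value is only a candidate for review, not proof of fraud; a person (or a downstream model) still has to investigate it.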


Copyright © 2024 Association for Financial Professionals, Inc.

All rights reserved.